Artificial Intelligence at the Edge: How Edge Computing Is Transforming AI Applications

1. What Does Artificial Intelligence at the Edge Really Mean?

Artificial intelligence at the edge is rapidly transforming how intelligent systems interact with the world by enabling data processing closer to the source—where the data is actually generated.

Understanding Edge Computing

Edge computing is a decentralized computing model that brings computation and data storage closer to devices, instead of relying entirely on centralized cloud servers. This reduces latency, conserves bandwidth, and allows for real-time responses, which are crucial in time-sensitive applications.

In contrast, cloud computing typically requires data to be sent to remote servers for processing, which can introduce delays—especially when dealing with large datasets or limited connectivity. Edge computing solves this problem by processing data locally on devices like sensors, mobile phones, or embedded systems.

What is AI at the Edge?

“AI at the edge” refers to deploying artificial intelligence algorithms directly on edge devices. These models are typically lightweight versions of their cloud counterparts, designed to run with limited memory and computing power. This approach allows devices to make intelligent decisions instantly without needing to connect to the cloud.

This is especially important for applications where speed, privacy, or offline capability is critical.

Real-World Applications

Some common examples of AI at the edge include:

- Smartphones using facial recognition or voice assistants without sending data to the cloud.

- IoT devices in smart homes and industrial automation, analysing sensor data on-site.

- Autonomous vehicles that process visual and sensor inputs in real time to make navigation decisions.

By enabling real-time, local processing, artificial intelligence at the edge is reshaping how we build responsive, efficient, and secure AI systems for the future.

2. How Do Edge and AI Work Together to Improve Performance?

Edge AI improves the performance of intelligent systems through localized, real-time data processing.

Reducing Latency and Saving Bandwidth

One of the major performance advantages of combining edge computing with AI is reduced latency. Traditionally, AI models running in the cloud require sending data to a server for processing and then waiting for a response. This can cause delays, especially in time-sensitive applications like autonomous driving or real-time surveillance.

With edge computing, data is processed locally, right on the device, so decisions can be made with minimal delay. This reduces lag, improves user experience, and lessens the dependence on constant internet connectivity. It also cuts bandwidth consumption, since only relevant or summarized data needs to be sent to the cloud.
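The bandwidth saving can be illustrated with a minimal sketch. It assumes a device that collects a window of raw sensor readings and uploads only a compact summary; the function name, the field names, and the threshold value are hypothetical and chosen purely for illustration.

```python
from statistics import mean

def summarize_window(readings, alert_threshold):
    """Reduce a window of raw sensor readings to a small summary dict."""
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(mean(readings), 2),
        # Flag the window so the cloud can ask for raw data only when needed.
        "alert": max(readings) > alert_threshold,
    }

# Hypothetical window of temperature readings (degrees Celsius).
window = [21.0, 21.5, 22.0, 21.5, 29.0, 21.0]
summary = summarize_window(window, alert_threshold=28.0)
print(summary)
```

In this sketch the device would transmit one small dictionary per window instead of every raw reading, which is one simple way of sending only "relevant or summarized data".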

Enhancing Data Privacy and Security

When sensitive data like video feeds, biometric information, or health metrics is processed on-device, it does not need to leave the local environment. This not only reduces the risk of data breaches but also helps organizations comply with data privacy regulations such as GDPR or HIPAA.

Inference vs. Training at the Edge

AI systems typically go through two stages: training and inference. Training involves feeding large datasets into models to help them learn patterns, and it's usually done in powerful cloud environments.

Inference, on the other hand, is the stage where the trained model makes predictions. At the edge, inference allows devices to respond in real time—for example, a smart camera detecting motion or a drone identifying objects mid-flight.
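To make the split concrete, here is a minimal sketch of the inference side only. It assumes a tiny logistic model whose parameters were produced by training elsewhere; the weights, bias, and feature meanings are hypothetical, and a real smart camera would run a far larger model.

```python
import math

# Hypothetical parameters, as if exported after training in the cloud.
WEIGHTS = [0.8, -0.5, 1.2]
BIAS = -0.3

def predict(features):
    """Run one inference step: a weighted sum followed by a sigmoid."""
    z = sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))

def motion_detected(features, threshold=0.5):
    """Turn the model's probability into an on-device decision."""
    return predict(features) >= threshold

print(motion_detected([1.0, 0.0, 1.0]))
```

The device never sees the training data here. It only stores the learned parameters and evaluates a cheap forward pass, which is why inference fits on constrained hardware far more easily than training.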

Together, edge and AI optimize performance by bringing powerful, real-time intelligence directly to the device level.

3. What are the Key Use Cases of Artificial Intelligence at the Edge?

Artificial intelligence at the edge is transforming industries by enabling smart, fast, and localized decision-making. From healthcare to agriculture, edge AI delivers real-time intelligence where it’s needed most.

Healthcare: Remote Monitoring Devices

Edge AI allows wearable and in-home health devices to analyse patient data on the spot. These systems can detect critical conditions like abnormal heart rates, irregular breathing, or sudden falls. By processing this information locally, devices can alert caregivers or emergency services instantly—without needing a constant cloud connection.

Manufacturing: Predictive Maintenance

In industrial settings, edge-based sensors installed on machines track variables like vibration, temperature, and pressure. AI models running on the edge analyse this data in real time to predict equipment failures before they happen. This reduces unplanned downtime, extends machinery lifespan, and lowers maintenance costs.
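One simple way to approximate this kind of on-device monitoring is a rolling z-score check, sketched below. This is a statistical stand-in for a trained model, not a description of any particular product; the class name, window size, and threshold are assumptions made for the example.

```python
from collections import deque
from statistics import mean, stdev

class VibrationMonitor:
    """Flag readings that deviate strongly from the recent baseline."""

    def __init__(self, window_size=20, z_threshold=3.0):
        self.history = deque(maxlen=window_size)
        self.z_threshold = z_threshold

    def update(self, value):
        """Return True if value is anomalous relative to recent history."""
        is_anomaly = False
        if len(self.history) >= 5:  # wait for a minimal baseline first
            mu = mean(self.history)
            sigma = stdev(self.history)
            if sigma > 0 and abs(value - mu) / sigma > self.z_threshold:
                is_anomaly = True
        # Learn only from normal readings so a fault does not become the baseline.
        if not is_anomaly:
            self.history.append(value)
        return is_anomaly

monitor = VibrationMonitor()
for reading in [1.0, 1.1, 0.9, 1.05, 0.95, 1.0, 1.1, 0.9]:
    monitor.update(reading)
print(monitor.update(5.0))
```

Because the check needs only a short history buffer and a few arithmetic operations, it can run on a small microcontroller next to the sensor.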

Retail: Smart Cameras and Sensors

Retailers use edge AI to power in-store analytics and improve customer experience. Smart cameras can count foot traffic, monitor inventory, and detect suspicious behaviour, all without streaming data to the cloud. This reduces bandwidth use while protecting privacy and improving operational efficiency.

Agriculture: Drones and Edge Sensors

Modern farming increasingly depends on drones and soil sensors equipped with AI capabilities. These edge devices analyse field conditions, detect crop diseases, and monitor pest activity in real time. With localized data processing, farmers can make faster, more informed decisions that increase crop yield and reduce waste.

Transportation: Self-Driving Systems

Autonomous vehicles rely on artificial intelligence at the edge to make split-second driving decisions. Cameras, radar, and LiDAR sensors collect and process data on-board to identify obstacles, read signs, and navigate roads. This real-time inference is essential for safety and responsiveness, especially in unpredictable driving conditions.

These applications show how edge AI is enabling smarter, faster, and more context-aware solutions across critical industries.

4. What Technologies are Powering Edge and AI Innovation?

Edge AI is evolving rapidly, thanks to a powerful combination of hardware, software, and connectivity technologies that make real-time intelligence possible outside of traditional cloud environments.

Hardware: Compact and Capable Edge Devices

Specialized hardware forms the foundation of edge AI systems. Devices like microcontrollers and edge-specific boards are designed to run AI models efficiently in constrained environments. Popular platforms such as NVIDIA Jetson and Google Coral enable developers to deploy advanced AI models on compact, power-efficient hardware. These systems support vision processing, speech recognition, and sensor data analysis directly on the device—without needing a connection to the cloud.

Software: Lightweight AI Frameworks

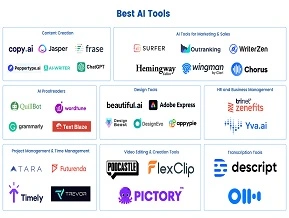

To support AI at the edge, software tools must be optimized for speed and low memory usage. TensorFlow Lite and ONNX (an open model format, typically executed with ONNX Runtime) make it possible to convert, compress, and run complex machine learning models on edge hardware, while TinyML refers to the broader practice of running machine learning on microcontroller-class devices. In addition to these core tools, a variety of edge deployment tools help developers manage models, update them remotely, and monitor performance, enabling efficient on-device AI deployment at scale.

Connectivity: Fast, Reliable Data Transfer

Reliable and high-speed connectivity is critical for edge devices that still need to send selective data to the cloud or communicate with other systems. Technologies like 5G and Wi-Fi 6 provide the necessary speed and bandwidth to support real-time applications, especially in scenarios like autonomous driving or smart cities. Low-power wireless protocols (e.g., Zigbee, LoRaWAN, BLE) are also widely used for IoT and edge environments where energy efficiency is key.

Together, these technologies make edge AI not only possible but highly effective, bringing AI capabilities into everything from industrial machines to consumer electronics while enhancing speed, efficiency, and privacy.

5. What are the Challenges of Deploying Artificial Intelligence at the Edge?

Deploying artificial intelligence at the edge comes with unique challenges that developers and organizations must overcome to unlock its full potential.

Limitations of Compute Power and Memory

Edge devices are often constrained by limited processing power and memory compared to cloud data centres. Running complex AI models locally requires significant optimization to fit these constraints. Developers must carefully balance model accuracy with size and speed, often relying on techniques like model pruning, quantization, or using lightweight architectures. These limitations can impact the type and complexity of AI tasks that can be performed on the edge.
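The two techniques named above can be sketched in plain Python. This is an illustrative approximation under simple assumptions (a flat list of weights, 8-bit affine quantization, magnitude-based pruning); production toolchains apply the same ideas per layer or per channel with more care.

```python
def quantize(weights, num_bits=8):
    """Map floats to unsigned integers using a scale and a zero point."""
    qmax = 2 ** num_bits - 1
    w_min, w_max = min(weights), max(weights)
    scale = (w_max - w_min) / qmax
    if scale == 0:
        scale = 1.0  # all weights identical; any scale works
    zero_point = round(-w_min / scale)
    q = [max(0, min(qmax, round(w / scale) + zero_point)) for w in weights]
    return q, scale, zero_point

def dequantize(q, scale, zero_point):
    """Recover approximate float weights from their integer codes."""
    return [(v - zero_point) * scale for v in q]

def prune(weights, fraction=0.5):
    """Zero out the given fraction of weights with the smallest magnitude."""
    k = int(len(weights) * fraction)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]

weights = [-1.0, -0.25, 0.0, 0.5, 1.0]
q, scale, zero_point = quantize(weights)
print(q, dequantize(q, scale, zero_point))
print(prune([0.9, -0.05, 0.4, 0.01, -0.7, 0.1]))
```

Storing each weight as one byte instead of a 32-bit float cuts memory roughly fourfold, at the cost of a small rounding error per weight. Pruning trades accuracy for sparsity in a similar way, which is the balance between accuracy, size, and speed described above.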

Security Concerns in Decentralized Environments

Unlike centralized cloud systems, edge computing environments are inherently decentralized and often distributed across many devices and locations. This decentralization increases the attack surface for potential security threats. Protecting sensitive data processed on edge devices and ensuring secure communication between devices and cloud infrastructure is critical. Additionally, physical tampering risks and challenges in applying timely security patches make securing edge AI systems more complex.
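As one small piece of securing device-to-cloud communication, a message can carry an authentication tag so the receiver can detect tampering. The sketch below uses Python's standard `hmac` module with a shared secret; the function names and payload fields are hypothetical, and it assumes the key has already been provisioned securely. It provides integrity and authenticity only, so a real deployment would still need encryption in transit (for example TLS) and proper key management.

```python
import hashlib
import hmac
import json

def sign_message(payload, key):
    """Serialize a payload and compute an HMAC-SHA256 tag over it."""
    body = json.dumps(payload, sort_keys=True).encode("utf-8")
    tag = hmac.new(key, body, hashlib.sha256).hexdigest()
    return body, tag

def verify_message(body, tag, key):
    """Check the tag in constant time to avoid timing side channels."""
    expected = hmac.new(key, body, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, tag)

# Hypothetical shared secret and sensor payload.
key = b"device-secret-for-illustration-only"
body, tag = sign_message({"device_id": "sensor-01", "temp_c": 22.5}, key)
print(verify_message(body, tag, key))
```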

Model Optimization and Updating On-Device

One of the biggest challenges is maintaining and updating AI models deployed at the edge. Unlike cloud-based AI, where models can be updated instantly and continuously, edge AI … operations. Moreover, optimizing models to run efficiently without sacrificing performance is an ongoing process. Developers must design models that are adaptable to varying hardware capabilities and network conditions.
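One common safeguard when updating a model on a device is to verify the downloaded file before replacing the one in use. The following is a minimal sketch under stated assumptions: the update arrives as bytes together with an expected SHA-256 digest obtained from a trusted source, and the function name and file layout are hypothetical.

```python
import hashlib
import os
import tempfile

def install_model(new_bytes, expected_sha256, target_path):
    """Install a model file only if its SHA-256 digest matches the expected one."""
    if hashlib.sha256(new_bytes).hexdigest() != expected_sha256:
        return False  # reject the update and keep the current model
    directory = os.path.dirname(os.path.abspath(target_path))
    # Write next to the target so the final rename stays on one filesystem.
    fd, tmp_path = tempfile.mkstemp(dir=directory)
    with os.fdopen(fd, "wb") as tmp:
        tmp.write(new_bytes)
    os.replace(tmp_path, target_path)  # swap the file in a single step
    return True
```

Writing to a temporary file and then renaming it means the device is never left with a half-written model if power or connectivity is lost during the update.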

Addressing these challenges is essential for the successful adoption of edge AI. As hardware and software technologies advance, many of these hurdles are gradually being overcome, making edge AI more accessible and reliable across industries.

6. What Skills are Needed to Work in Edge and AI Fields?

Building a successful career in artificial intelligence at the edge requires a diverse set of technical skills and hands-on experience. Understanding the unique demands of edge environments is crucial for developing efficient and effective AI solutions.

Embedded Systems Knowledge

A solid foundation in embedded systems is essential. Professionals should be familiar with microcontrollers, sensors, hardware interfaces, and real-time operating systems (RTOS). This knowledge helps in designing AI applications that can run efficiently on limited hardware resources typical of edge devices.

AI Model Compression and Optimization

Since edge devices have restricted memory and compute capabilities, AI models must be compressed and optimized without significantly compromising accuracy. Skills in techniques like pruning, quantization, and knowledge distillation, along with experience using lightweight architectures (e.g., MobileNet) and TinyML methods, are highly valuable. Understanding how to tailor models for specific hardware is key to successful deployment.
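Knowledge distillation, for instance, trains a small "student" model to match the softened output distribution of a larger "teacher". A minimal sketch of the core loss term is shown below, assuming both models output raw logits for the same input; the temperature value and function names are illustrative.

```python
import math

def softmax(logits, temperature=1.0):
    """Convert logits to probabilities; higher temperature gives a softer output."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL divergence between the softened teacher and student distributions."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))

teacher = [4.0, 1.0, 0.5]
student = [2.5, 1.5, 0.2]
print(distillation_loss(student, teacher))
```

In practice this term is usually combined with the ordinary loss on the true labels, and it is often scaled by the square of the temperature. Those details are omitted here to keep the sketch short.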

Programming for Edge Devices

Proficiency in programming languages such as Python and C++ is critical. Python is widely used for developing and training AI models, while C++ is often necessary for deploying models on resource-constrained edge devices. Familiarity with frameworks and formats such as TensorFlow Lite and ONNX, and with tools that support edge deployment, is also important.

Recommended Learning Paths

Online courses focused on AI and edge computing provide structured knowledge. Practical projects, such as building AI-powered IoT devices or working with edge AI hardware kits like NVIDIA Jetson or Google Coral, help solidify skills. Participating in open-source communities and contributing to edge AI projects can further enhance practical experience.

7. What is the Future of Artificial Intelligence at the Edge?

The future of artificial intelligence at the edge is incredibly promising, driven by advancements that are reshaping how AI interacts with data and devices outside traditional cloud environments.

Growth of Intelligent IoT

The expansion of the Internet of Things (IoT) is a key factor fuelling edge AI growth. As billions of connected devices generate massive amounts of data, processing it locally becomes essential for speed, privacy, and efficiency. Intelligent IoT devices equipped with AI capabilities will continue to proliferate across homes, industries, and cities, enabling smarter environments that respond instantly to real-world conditions.

Integration with Generative AI and Large Language Models

Emerging AI technologies, including generative AI and large language models (LLMs), are increasingly being adapted for edge deployment. While traditionally resource-heavy, innovations in model compression and hardware acceleration are making it feasible to run sophisticated AI models closer to users. This integration will enable more personalized and context-aware applications, such as advanced voice assistants and real-time content generation directly on edge devices.

Edge-Cloud Collaboration and Federated Learning

The future of AI at the edge isn’t about replacing the cloud but enhancing it through seamless collaboration. Hybrid architectures that combine edge processing with cloud resources will optimize performance and scalability. Federated learning, a technique where models are trained across multiple decentralized devices while preserving data privacy, is gaining traction. This approach lets edge devices learn collectively by sharing model updates rather than their raw data.
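The aggregation step at the heart of federated learning can be sketched as a weighted average of the model parameters each device reports. This is a simplified illustration of federated averaging, assuming every client sends a flat list of weights plus the number of local samples it trained on; the function name and the example values are hypothetical.

```python
def federated_average(client_updates):
    """Average client weights, weighting each client by its sample count.

    client_updates: list of (weights, num_samples) tuples, where every
    weights list has the same length.
    """
    total = sum(n for _, n in client_updates)
    size = len(client_updates[0][0])
    return [
        sum(w[i] * n for w, n in client_updates) / total
        for i in range(size)
    ]

# Two hypothetical devices; the second trained on three times as much data.
updates = [
    ([0.2, 1.0], 100),
    ([0.4, 0.0], 300),
]
global_weights = federated_average(updates)
print(global_weights)
```

Only the weight lists and sample counts leave each device in this sketch, while the training data itself stays local. Real systems typically add protections such as secure aggregation on top, since model updates can still leak some information.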

Trends Shaping AI’s Future at the Edge

Key trends shaping this future include advancements in ultra-low-power hardware, 5G connectivity, and AI model optimization techniques. Additionally, growing concerns about data privacy and security will drive the adoption of edge AI as a preferred solution for sensitive applications.

Conclusion

To get started with learning edge and AI technologies, begin by working hands-on with popular edge AI kits such as NVIDIA Jetson or Google Coral. These practical projects help build a strong understanding of real-world applications. Additionally, explore online learning platforms that offer courses focused on edge computing and artificial intelligence, and engage with communities where you can share knowledge and collaborate. Developing skills in artificial intelligence at the edge opens up exciting career opportunities. Start your journey today by exploring LAI’s online courses designed to equip you with the latest AI and edge technology expertise.