AI and GPU: Why Are Graphics Processing Units Essential for Deep Learning?

What is the Relationship Between AI and GPU?

The relationship between AI and GPU technologies is fundamental to the advances we see in artificial intelligence today. Graphics Processing Units (GPUs) were originally designed to handle the calculations required for rendering images and video in computer graphics. Over time, their architecture, built around thousands of smaller, efficient cores designed for parallel processing, proved to be exceptionally well suited to the heavy computational demands of AI workloads.

GPUs have evolved significantly since their inception in the 1990s. Early GPUs focused purely on graphics rendering for gaming and professional design applications. However, as AI research grew, developers recognized that the parallel processing capabilities of GPUs could accelerate the training and inference of deep learning models. This shift has driven GPU manufacturers to add hardware optimized for AI tasks, such as NVIDIA’s Tensor Cores, which are units inside the GPU designed specifically for the matrix calculations used in machine learning.

Why do GPUs matter so much for AI workloads? Traditional Central Processing Units (CPUs) have a small number of powerful cores that are optimized for a broad range of computations and largely sequential work, whereas GPUs excel at performing many similar calculations simultaneously. This makes them ideal for training neural networks, where large amounts of data and mathematical operations are processed in parallel. As a result, training runs that once took weeks can now be completed in days or even hours.

Many AI tasks benefit from GPU acceleration, including image recognition, natural language processing, and autonomous vehicle navigation. Complex models like convolutional neural networks (CNNs) and transformer architectures rely heavily on GPU power to efficiently process massive datasets.

In summary, the symbiotic relationship between these technologies has propelled the field forward, enabling researchers and developers to tackle increasingly sophisticated problems with speed and precision.

How Does GPU Artificial Intelligence Acceleration Work?

At the core of GPU acceleration for artificial intelligence is the concept of parallel processing. Unlike CPUs, which typically execute a small number of tasks at a time, GPUs are designed to handle thousands of operations simultaneously. This ability to process many calculations in parallel is crucial for AI tasks, especially deep learning, where massive datasets require repeated mathematical operations such as matrix multiplications and tensor computations. Parallel processing dramatically reduces the time needed to train complex neural networks, enabling faster experimentation and deployment.
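To make the idea concrete, here is a minimal sketch in plain Python of why matrix multiplication parallelizes so well: every output element depends only on one row and one column, so all of them can be computed independently. The function names (`dot`, `matmul_parallel`) and the thread pool are illustrative assumptions, not how a GPU or any framework actually schedules work, and Python threads will not deliver a real speedup here.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, col):
    """One output element: a multiply-accumulate over a row and a column."""
    return sum(a * b for a, b in zip(row, col))


def matmul_parallel(a, b, workers=4):
    """Compute a @ b by submitting every output element as its own task."""
    cols = list(zip(*b))  # columns of b
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Task (i, j) reads only row i of a and column j of b,
        # so no task has to wait for the result of another.
        futures = [[pool.submit(dot, row, col) for col in cols] for row in a]
        return [[f.result() for f in row] for row in futures]


A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
C = matmul_parallel(A, B)
print(C)  # prints [[19, 22], [43, 50]]
```

A GPU applies the same decomposition, but with thousands of hardware threads working on the independent elements at once.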

GPU Architecture vs. CPU Architecture

GPUs differ from CPUs in their architecture and purpose. While CPUs have a few powerful cores optimized for general-purpose computing and quick task switching, GPUs consist of thousands of smaller, specialized cores that focus on executing many similar tasks concurrently. This design allows GPUs to handle data-heavy AI workloads efficiently. CPUs manage the overall system operations and run instructions that require complex decision-making, but for AI model training and inference, GPUs provide the speed and throughput necessary for scalable performance.

Deep Learning Frameworks Optimized for GPUs

Popular deep learning frameworks like TensorFlow and PyTorch are explicitly optimized to leverage GPU acceleration. These frameworks include built-in support to offload intensive computations onto GPUs seamlessly, maximizing performance without requiring developers to manage low-level GPU programming. By utilizing CUDA (Compute Unified Device Architecture) or ROCm (for AMD GPUs), these frameworks make it easier for AI practitioners to train large models more quickly, fine-tune hyperparameters, and handle bigger datasets—all essential for pushing the boundaries of AI research and applications.
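As a rough illustration of how little code this offloading takes, the sketch below selects a device with PyTorch and runs a matrix multiplication on it. It assumes PyTorch may or may not be installed and falls back gracefully; the helper names `pick_device` and `matmul_demo` are hypothetical. PyTorch builds for ROCm are generally exposed through the same `torch.cuda` interface, but treat that as something to confirm for your installation.

```python
try:
    import torch  # optional dependency; the sketch degrades to CPU-only without it
except ImportError:
    torch = None


def pick_device():
    """Return "cuda" when PyTorch can see a GPU, otherwise "cpu"."""
    if torch is not None and torch.cuda.is_available():
        return "cuda"
    return "cpu"


def matmul_demo(n=64):
    """Multiply two n x n random matrices on the selected device."""
    device = pick_device()
    if torch is None:
        return device, None  # nothing to run without PyTorch
    a = torch.rand(n, n, device=device)
    b = torch.rand(n, n, device=device)
    return device, tuple((a @ b).shape)


device, shape = matmul_demo()
print(device, shape)
```

The same script runs unchanged on a laptop without a GPU and on a GPU server; only the device string differs.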

The combination of specialized GPU hardware and optimized software frameworks drives the rapid progress seen in AI today, making GPU acceleration a cornerstone of modern AI development.

Why are GPUs Essential for Deep Learning in 2025?

As AI models grow increasingly complex and data-hungry in 2025, the demand for powerful computing resources is higher than ever. Modern deep learning architectures, such as transformer-based models used in natural language processing and large-scale computer vision networks, require vast amounts of computation during training. GPUs are uniquely suited to handle these demanding workloads because they can process many operations simultaneously. This capability enables researchers and developers to experiment with larger models and datasets, pushing the boundaries of what AI can achieve.

Real-World Examples of GPU-Powered AI Applications

From autonomous vehicles to personalized healthcare, GPUs power many cutting-edge AI applications today. For instance, self-driving cars use GPUs to process real-time sensor data and make split-second decisions. In healthcare, GPUs accelerate the analysis of medical images for faster and more accurate diagnoses. Similarly, e-commerce platforms rely on GPU-accelerated AI to deliver personalized recommendations and fraud detection. These real-world applications demonstrate how GPUs are integral to transforming AI research into impactful solutions.

Speed, Efficiency, and Scalability Benefits

The combination of speed and efficiency makes GPUs indispensable for deep learning. Training a large AI model on CPUs alone could take weeks or months, but GPUs can reduce this to days or even hours. This acceleration helps companies and researchers iterate faster, bringing innovations to market more quickly. Additionally, GPUs support scalable solutions—from single devices to large distributed clusters—enabling AI workloads to grow alongside business needs.
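When estimating how far such scaling can go, Amdahl's law is a common back-of-the-envelope tool: the part of a job that cannot be parallelized limits the overall speedup. The sketch below is an illustration only; the 95% parallel fraction and the device counts are assumed values, not measurements from any real training run.

```python
def amdahl_speedup(parallel_fraction, n_units):
    """Ideal speedup when a fraction of the work is spread over n_units."""
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / n_units)


# Assumed workload: 95% of the time is parallelizable (hypothetical figure).
for n in (1, 8, 64):
    print(n, round(amdahl_speedup(0.95, n), 2))
```

With these assumed numbers, adding devices helps a great deal at first and then flattens out, which is why data loading and other serial steps matter as clusters grow.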

The synergy of AI and GPU technologies in 2025 ensures that deep learning continues to evolve rapidly, making GPUs essential tools for both researchers and industry practitioners.

What are the Key GPU Technologies Driving AI Innovation?

The rapid advancement of AI has driven GPU manufacturers to develop models specifically optimized for deep learning and machine learning workloads. Modern GPUs, such as NVIDIA’s A100 and H100 series, are built with AI-centric features that significantly boost performance. These GPUs offer enhanced memory bandwidth, increased core counts, and specialized processing units designed to accelerate AI computations. Such hardware advancements enable faster training times, larger models, and more efficient inference, empowering researchers and businesses to tackle increasingly complex AI challenges.

CUDA, Tensor Cores, and AI-Specific GPU Features

NVIDIA’s CUDA (Compute Unified Device Architecture) platform revolutionized AI development by allowing programmers to harness GPU power through a flexible and efficient programming model. CUDA enables deep learning frameworks to optimize their code for GPU acceleration seamlessly. Another game-changing innovation is the introduction of Tensor Cores, specialized units within GPUs designed to speed up matrix operations that are fundamental to neural networks. Tensor Cores drastically improve AI training and inference speeds, making them essential for current and next-generation AI models.
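The operation a Tensor Core accelerates can be summarized as a fused multiply-accumulate on small matrix tiles, D = A x B + C, typically with reduced-precision inputs and a wider accumulator. The sketch below emulates that arithmetic in plain Python as an assumption-laden illustration: `to_fp16` and `tile_mma` are hypothetical helper names, the accumulator here is a Python float rather than FP32, and real hardware performs the whole tile in parallel rather than in loops.

```python
import struct


def to_fp16(x):
    """Round a Python float to the nearest IEEE half-precision value."""
    return struct.unpack("e", struct.pack("e", x))[0]


def tile_mma(a, b, c):
    """Return a @ b + c for small square tiles with fp16-rounded inputs."""
    n = len(a)
    a16 = [[to_fp16(v) for v in row] for row in a]
    b16 = [[to_fp16(v) for v in row] for row in b]
    d = [row[:] for row in c]  # accumulator starts at C and stays in wider precision
    for i in range(n):
        for j in range(n):
            for k in range(n):
                d[i][j] += a16[i][k] * b16[k][j]
    return d


A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
C = [[1, 1], [1, 1]]
print(tile_mma(A, B, C))
```

Large matrix multiplications are built by repeating this tile operation many times, which is why speeding it up in hardware has such a large effect on training and inference.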

Emerging Hardware Trends: TPU, FPGA vs GPU

While GPUs dominate AI acceleration today, other specialized hardware options are emerging. Google’s Tensor Processing Units (TPUs) are custom-built for AI workloads and offer competitive performance for specific tasks, particularly in TensorFlow environments. Field Programmable Gate Arrays (FPGAs) provide customizable hardware acceleration but require more specialized knowledge to program. Each technology has unique advantages: GPUs offer flexibility and widespread software support, TPUs excel in large-scale cloud deployments, and FPGAs shine in low-latency, specialized applications. Understanding these trends helps AI professionals choose the best hardware for their specific needs and stay ahead in the field of GPU-accelerated artificial intelligence.

How to Get Started with AI and GPU for Deep Learning?

For those beginning their journey into deep learning, selecting the right GPU hardware is crucial. Entry-level learners can start with GPUs like NVIDIA’s RTX 3060 or 3070, which offer solid performance for training small to medium models without breaking the bank. Professionals and researchers aiming to work with large-scale models may prefer high-end hardware such as the NVIDIA RTX 4080 or data-center GPUs like the A100 and H100, which provide far more computing power and memory capacity. When choosing hardware, consider your specific needs, budget, memory requirements, and compatibility with your AI frameworks.
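One practical way to compare cards is to estimate whether a model's training state fits in GPU memory. The sketch below uses a common rule of thumb, stated here as an assumption: FP32 training with an Adam-style optimizer keeps roughly four values per parameter (weights, gradients, and two optimizer buffers), and activations are ignored. The function names, the 80% headroom factor, and the 12 GB card are hypothetical inputs, so check the actual memory of any card you are considering.

```python
def training_memory_gb(n_params, copies=4, bytes_per_value=4):
    """Rough training footprint in GB: weights + gradients + two optimizer buffers.

    Activations and framework overhead are deliberately excluded (assumption).
    """
    return n_params * copies * bytes_per_value / 1e9


def fits(n_params, vram_gb, headroom=0.8):
    """True if the estimate fits within a fraction of the card's memory."""
    return training_memory_gb(n_params) <= vram_gb * headroom


print(training_memory_gb(125_000_000))  # prints 2.0
print(fits(125_000_000, vram_gb=12))    # hypothetical 12 GB card
print(fits(1_000_000_000, vram_gb=12))
```

Under these assumptions a 125-million-parameter model fits comfortably on a 12 GB card, while a 1-billion-parameter model needs either more memory or techniques such as mixed precision.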

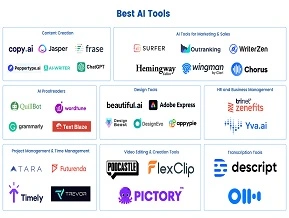

Online Courses and Certifications at LAI Focusing on AI Hardware

To build a strong foundation in both AI concepts and GPU acceleration, LAI offers a range of courses tailored for different skill levels. These courses cover the fundamentals of deep learning, GPU architecture, and how to leverage GPUs effectively for AI projects. Certifications from LAI not only enhance your knowledge but also demonstrate your expertise to potential employers. Courses include hands-on labs that teach practical skills in optimizing code for GPU performance using popular frameworks like TensorFlow and PyTorch.

Tips for Optimizing AI Projects Using GPUs

Maximizing the benefits of GPU acceleration requires more than just good hardware. Efficient data preprocessing, choosing the right batch sizes, and leveraging mixed-precision training can significantly speed up model training. Monitoring GPU utilization through tools like nvidia-smi or NVIDIA’s Nsight profilers helps identify bottlenecks and optimize resource use. Keep your GPU drivers and libraries updated to take advantage of the latest performance improvements.
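Mixed-precision training is usually paired with loss scaling, because very small gradients can round to zero in half precision. The sketch below demonstrates the effect with only the Python standard library; the gradient value and the scale factor of 1024 are assumed for illustration, and frameworks handle this automatically through their own mixed-precision utilities rather than through code like this.

```python
import struct


def to_fp16(x):
    """Round a Python float to the nearest IEEE half-precision value."""
    return struct.unpack("e", struct.pack("e", x))[0]


grad = 1e-8     # a tiny gradient value (assumed for illustration)
scale = 1024.0  # loss-scaling factor (assumed for illustration)

naive = to_fp16(grad)           # stored directly in fp16
scaled = to_fp16(grad * scale)  # scaled up before the fp16 round trip
recovered = scaled / scale      # unscaled again in higher precision

print(naive, scaled, recovered)
```

Stored directly, the gradient is lost to underflow; scaled first, it survives the trip through half precision and can be recovered with a small rounding error.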

Getting started with GPU-accelerated deep learning gives you access to powerful computational tools that speed up both learning and experimentation.

What is the Future Role of GPU Artificial Intelligence in Emerging AI Fields?

The future of AI is increasingly moving toward edge computing, where data is processed locally on devices rather than on centralized servers. GPUs play a crucial role in this shift by providing the necessary computational power in compact, energy-efficient formats. Edge AI applications, such as smart cameras, drones, and IoT devices, rely on GPUs to perform real-time data analysis and decision-making without the delay of a round trip to the cloud. This trend enables faster responses and greater privacy, as sensitive data doesn’t need to be transmitted off the device.

AI in Autonomous Vehicles, Healthcare, and Robotics

Emerging fields like autonomous vehicles, healthcare diagnostics, and robotics heavily depend on GPU-accelerated AI. Autonomous vehicles use GPUs to process sensor data, navigate complex environments, and make near-instant decisions. In healthcare, GPUs accelerate AI-driven medical imaging, drug discovery, and personalized treatment planning, with the goal of improving patient outcomes. Robotics benefits from GPUs by enabling machines to learn and adapt through deep learning algorithms, enhancing automation and precision in manufacturing and service industries.

Predictions for GPU Advancements Supporting AI Growth

Looking ahead, GPU technology is expected to evolve rapidly to meet AI’s growing demands. Innovations will likely focus on increasing processing power, improving energy efficiency, and expanding on-chip AI-specific units such as Tensor Cores alongside dedicated AI accelerators. Some also anticipate closer links between GPUs and emerging technologies like quantum computing and neuromorphic chips, although these remain at an early stage. These advancements should help researchers and businesses develop more sophisticated AI models, pushing the boundaries of what machines can learn and accomplish.

The expanding capabilities of GPU-accelerated artificial intelligence will continue to be a cornerstone in unlocking new frontiers in AI applications and innovations.

Conclusion

GPUs have become indispensable in driving the rapid development of AI technologies. Understanding the synergy between AI and GPUs is crucial for anyone aiming to excel in deep learning and AI innovation. By mastering GPU acceleration techniques, you can significantly enhance your AI projects’ speed and efficiency. To support your journey, LAI offers specialized courses focusing on GPU hardware and deep learning frameworks, giving you the skills to use GPU-accelerated artificial intelligence effectively. Start exploring these resources today and unlock the full potential of your AI ambitions.